It's hard to know what is preserved, and that seems to me quite a fundamental problem. I write this blog wearing my Crossref hat.

The point of the Digital Object Identifiers (DOIs) that we issue is that the link to the content is persistent. If the original publisher goes bankrupt or disappears for any reason, we can re-point the DOI to a successor publisher or to an archival source such as CLOCKSS, the National Library of the Netherlands, or Portico (or to multiple such services). The link will still resolve to the content. This is a way of ensuring persistent discoverability and accessibility in the long term.

But if there's no preservation service protecting the content to which a DOI is assigned, then when the publisher goes out of business, the DOI will stop working.

Now, in these situations people sometimes blame Crossref because the DOI doesn’t resolve. We become the most front-facing casualty of non-resolving DOIs. Yet the fault is actually with the publisher for not having adequate long-term preservation arrangements in place. These arrangements are crucial for protecting the scholarly record.

We believe so strongly in the importance of long-term preservation that the terms of Crossref membership include a commitment to make best efforts to contract with an archive and to preserve there all content that is assigned a DOI.

In the Crossref schema, when publishers deposit metadata they can tell us which archives are preserving specific content. Sadly, very few publishers use this functionality, and even when they do, we can't be absolutely sure of the truth of their assertions. It would be better to be able to trust but verify.

Using this assertion mechanism, it's perfectly possible for a publisher to say that material is preserved in CLOCKSS when it isn’t. So at present no one, including Crossref, can know for certain what proportion of content with DOIs is adequately preserved in a recognized and trusted archive. We don't know which actors, and which types of actors, are behaving well and which are behaving badly.
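To make "trust but verify" concrete, here is a minimal sketch of comparing what a publisher asserts against what archive data confirms. The function name and both input lists are hypothetical illustrations, not part of any Crossref tool or schema.

```python
def unverified_assertions(asserted, verified):
    """Return the archives a publisher claims that no archive data confirms.

    Both arguments are hypothetical lists of archive names; names are
    compared case-insensitively and with surrounding whitespace ignored.
    """
    def normalise(name):
        return name.strip().lower()

    confirmed = {normalise(name) for name in verified}
    return sorted(name for name in asserted if normalise(name) not in confirmed)


# Hypothetical example: the publisher asserts two archives,
# but only one of them reports actually holding the content.
claimed = ["CLOCKSS", "Portico"]
found_in = ["portico"]
print(unverified_assertions(claimed, found_in))
```

An empty result would mean that every assertion is backed by archive data. Anything returned is a claim that still needs checking.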

There's also a theoretical issue: who should be responsible for the act of preservation in the digital age?

We've seen the transfer from physical capacity where libraries would preserve actual things that they held, to a distributed licensing environment for intellectual property where publishers own and control the content, making them responsible for it. And indeed, as I said, at Crossref, we have terms and conditions holding publishers/members responsible for the preservation of digital content.

But without verification, we don’t know where the gaps are or if it is too late for some content. Have we lost a lot of content already due to inadequate preservation?

There's some better news.

There are some things we do have, and we do know already. The first is, there are great studies coming out all the time. Mikael Laakso has done some useful studies on open access journals and open access books. The more attention that we shine on digital preservation, I think the better, before it becomes an issue, and knowledge is under threat.

There is also the Keepers Registry, but it's slightly challenging in several ways. The service was founded originally by Peter Burnhill at EDINA at the University of Edinburgh. They built a portal for mass data about the preservation status at the container level (i.e. journals at the ISSN level). The service has moved and is now hosted by the ISSN International Centre. The terms and conditions of its use stipulate that you can't use it for any purpose that isn't explicitly authorized. So, you can go and look up your journal, and that's great. More systematic study is difficult because there’s no API and you can’t query it automatically. (Although I have heard that a paid-for API is in development.) Furthermore, there's no item-level (i.e. article-level) data.

The other change that the digital world has brought us is that most people don't read journals cover-to-cover anymore. They go for an article within a journal. But our infrastructures were designed around those traditional container formats. So, if you want to look up whether an article is preserved, you must look up the metadata for the container: you need to find the containing volume, issue, etc. Then you can look that up in the Keepers Registry, making sure that you're actually matching against the correct title with the correct ISSN, and so on. Only then can you say whether or not it's preserved.

I think we can do better than that! This is a huge infrastructural deficit in the scholarly communications landscape at the moment.

I've spent the last few months building an item-level preservation database, looking at a range of sources that fuel the Keepers Registry, and working out how we can create a translation mechanism from individual DOI-level data to this container-level format.
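A rough sketch of what such a translation might look like: take an article's own metadata (ISSN and volume) and match it against container-level holdings. The `Holding` class, the field names, and the sample ISSNs and DOI are all made up for illustration. The real database is more involved than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Holding:
    """One hypothetical container-level record: an archive holds these volumes of a journal."""
    archive: str
    issn: str
    volumes: frozenset


def normalise_issn(issn):
    # Treat "1234-5678" and "12345678" as the same identifier.
    return issn.replace("-", "").upper()


def archives_for_article(article, holdings):
    """Return the archives whose container-level holdings cover this article."""
    issns = {normalise_issn(i) for i in article["issn"]}
    volume = str(article["volume"])
    return sorted({
        h.archive
        for h in holdings
        if normalise_issn(h.issn) in issns and volume in h.volumes
    })


# Hypothetical holdings and article metadata, for illustration only.
holdings = [
    Holding("CLOCKSS", "1234-5678", frozenset({"1", "2"})),
    Holding("Portico", "12345678", frozenset({"2"})),
    Holding("PKP-PN", "8765-4321", frozenset({"2"})),
]
article = {"doi": "10.5555/example", "issn": ["1234-5678"], "volume": "2"}
print(archives_for_article(article, holdings))
```

Matching on a normalised ISSN plus volume is the simplest possible rule. Issue-level or date-range holdings would need more careful comparison.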

The sources that I used were CLOCKSS, the Global LOCKSS Network, HathiTrust, the Internet Archive, PKP-PN, Portico, and Scholars Portal. Now, that's not everything that is in the Keepers Registry, but I'm the only person working on this, so it was a good start, and it covers a vast proportion of the material that we find preserved in the Keepers Registry. Some of the keepers have 10 items in total, and it didn't seem worth my time to write a converter to get 10 more items when we're talking about millions of articles.

Also, if I was going to appraise this at scale and work out what preservation looks like, I needed some kind of grading system. It was really interesting to see earlier that licensing contracts specify 3 archives, because I haven't found a best practice document that everybody agrees on that says how much should be preserved, and in how many archives.

I did a lot of reading around and came up with a gold, silver, bronze, and unclassified scheme for this work where, for example, gold members would be those who have 75% of the content they publish in 3 or more recognized archives, silver 50% in 2 or more, and bronze 25% in one or more. And then unclassified for everything that doesn’t meet that threshold. That's my unofficial Martin Eve grading system.™ I'm afraid it's not anything official, but it was a way of starting to classify digital preservation statuses across the two axes of percentage preserved and in how many archives.
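The thresholds above can be written down as a small function. This is a minimal sketch that assumes the thresholds are inclusive ("at least 75%") and that each sampled work has already been reduced to a count of recognized archives holding it. The function name and input shape are my own illustration.

```python
def grade_member(archive_counts):
    """Grade a member from its sampled works.

    archive_counts: one integer per sampled work, giving the number of
    recognized archives in which that work was found (assumed input shape).
    """
    total = len(archive_counts)
    if total == 0:
        return "unclassified"

    def share(min_archives):
        # Proportion of works held in at least `min_archives` archives.
        return sum(1 for n in archive_counts if n >= min_archives) / total

    if share(3) >= 0.75:
        return "gold"
    if share(2) >= 0.50:
        return "silver"
    if share(1) >= 0.25:
        return "bronze"
    return "unclassified"


print(grade_member([3, 3, 3, 0]))     # 75% of works in 3 archives
print(grade_member([2, 2, 0, 0]))     # 50% of works in 2 archives
print(grade_member([1, 0, 0, 0]))     # 25% of works in 1 archive
print(grade_member([1, 0, 0, 0, 0]))  # only 20% of works in any archive
```

The checks run from the strictest grade down, so a member that satisfies gold is never reported as silver or bronze.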

Crossref Sampling Framework

Crossref has approximately 150 million metadata records for scholarly artifacts. For those who don't know: it takes a very long time computationally to process 150 million metadata records and do a look-up against them. It's not really feasible to do this frequently. It takes days and days and days to run this kind of query.

So, Crossref has a project called the sampling framework, where basically we can pull out 1,000 or so artifacts per Crossref publisher member, dump those somewhere, and use this as a representative sample.
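In spirit, the per-member sampling looks something like the sketch below. This is not the sampling framework's actual code: the function name, the input mapping, and the fixed seed are assumptions for illustration.

```python
import random


def sample_per_member(works_by_member, k=1000, seed=0):
    """Pick up to k works per member, uniformly at random.

    works_by_member: hypothetical mapping of member ID -> list of DOIs.
    Members with k or fewer works contribute everything they have.
    """
    rng = random.Random(seed)  # fixed seed so a run can be repeated
    sample = {}
    for member, dois in works_by_member.items():
        dois = list(dois)
        sample[member] = dois if len(dois) <= k else rng.sample(dois, k)
    return sample


# Hypothetical members with made-up DOIs under a test prefix.
works = {
    "member-a": [f"10.5555/a{i}" for i in range(5)],
    "member-b": [f"10.5555/b{i}" for i in range(2)],
}
sampled = sample_per_member(works, k=3)
print({member: len(dois) for member, dois in sampled.items()})
```

Capping the sample per member keeps the total workload bounded, however many records the largest members have deposited.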

I ran the sampling framework against the preservation database and made the data public. And just to say also that the final presentations of this data are all available in the Crossref Labs API. I ended up with about 7.5 million DOIs in the sample and thought this was a pretty good starting point.

So what does the preservation status look like for these 7.5 million DOIs? It’s not great. Using my classification system, a huge number of Crossref members fall into the unclassified category: for 6,982 members (32.9% of all members), we were unable to detect that they had any preservation arrangements at all.

It's not much better for the bronze category, which is publishers who are just doing about 25% in one archive. We are looking at about 12,257 members or 57.7% of all members. This does not, in my view, provide adequate redundancy for their preservation mechanisms.

It starts to get better when we get to the silver category, with 1,797 members preserving at this level: 50% of their content in 2 or more archives. That's 8.46% of the Crossref member base.

It seems to me quite shocking that this is the case, and I want to know what types of publisher are in each category because it will give us an indication of where we might need to focus outreach, identify who might need help with preservation, and what we might be able to do to intervene to improve the situation.

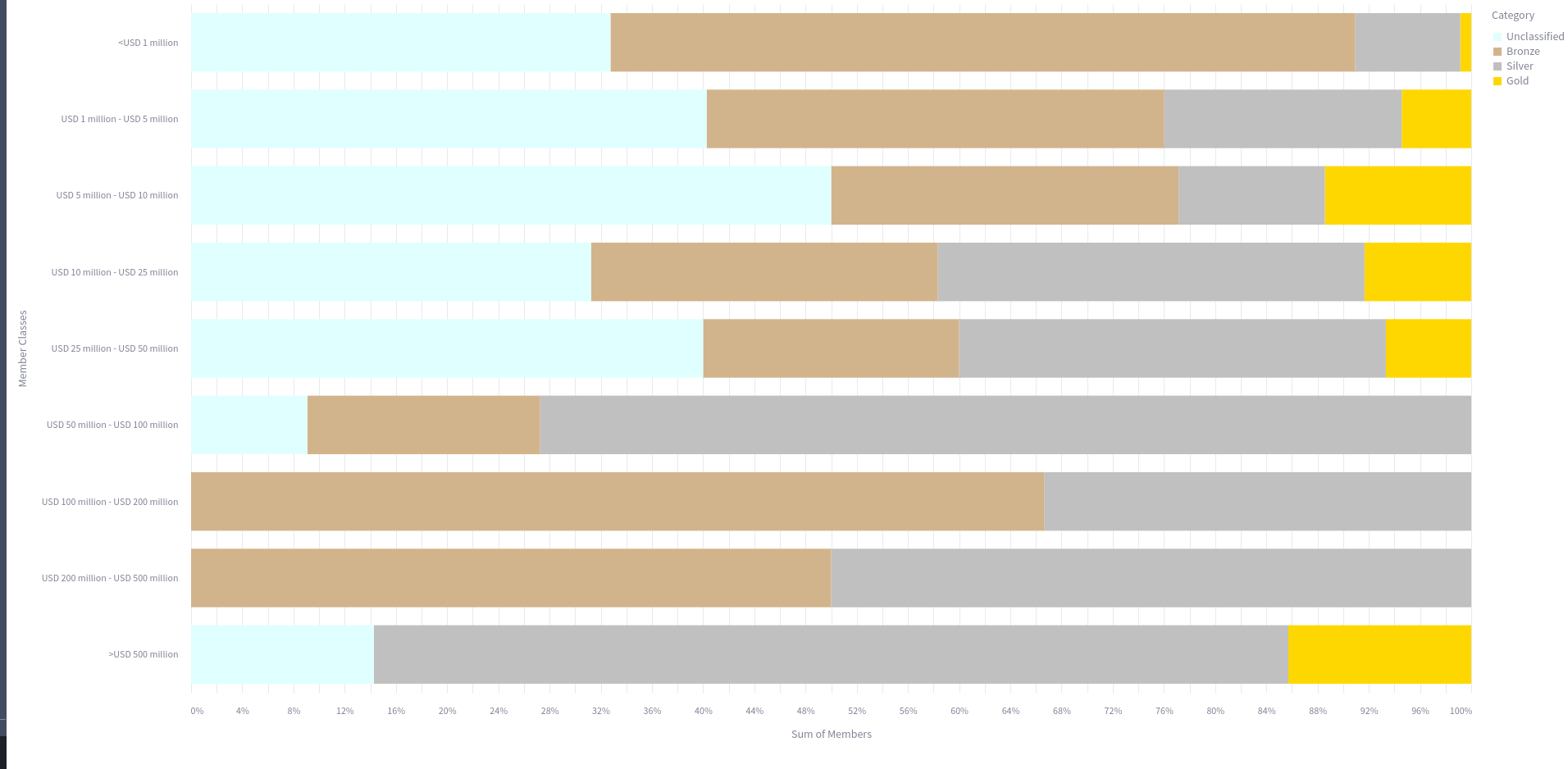

There are various ways you can categorize Crossref member publishers. One of them is by revenue. This is a chart that goes from lowest-earning publishers at the top through to highest-earning publishers at the bottom.

Now, I'd love to be able to say that the small boutique members take a lot of care and really do a good job on preservation. Some of them do. But it's not really the overall trend that we see here.

Taking silver as kind of the baseline, you can see a gradual improvement in the percentage of material published by each of these types of publishers where they are doing much better as a proportion in the silver bracket than any other.

That said, you can see that there is a pickup in gold publishers at the smaller end of this spectrum. And there's a group in the middle who are doing that, and we need to investigate who they are and have a chat. This group of smaller publishers are doing a good job!

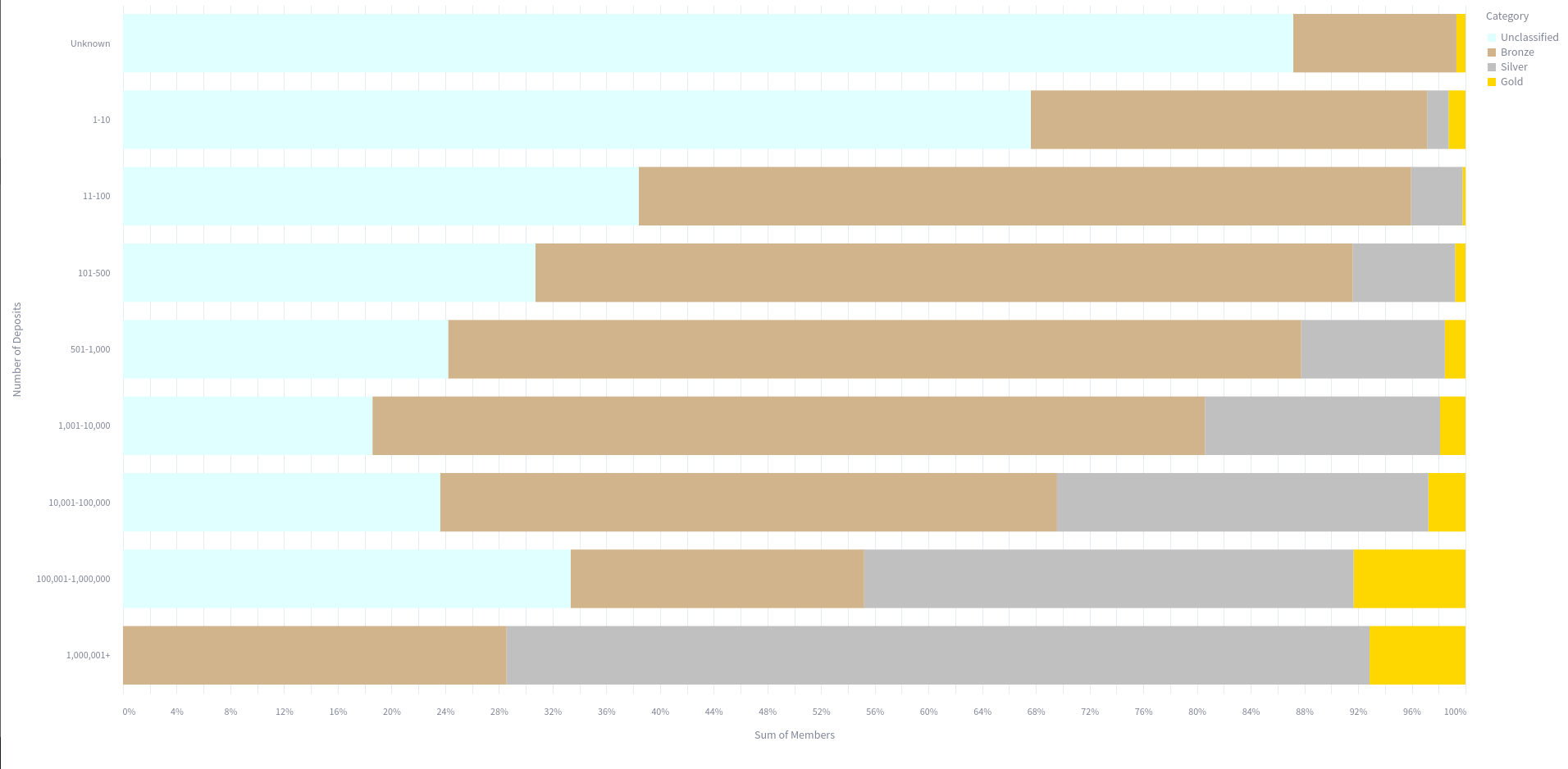

You can also measure a member’s size by number of deposits: how much people are publishing, not just how much money they're taking. Again, the trend is really clear. This silver bar at the bottom for the biggest publishers is a substantial portion of their preservation, whereas, in the smaller publishers the unclassified bars are substantially larger.

A quandary for outreach

If you just took these proportions, you might think we need to focus our energy on the smaller publishers where there's this huge proportion of stuff that is completely unpreserved. That's problematic, because it might be that some of these publishers only published 50 articles, and the bigger publishers published 50,000 articles. So, where you put your labour into these interventions is all about working out the economy of what you'll get in return for it and we can't let perfect be the enemy of good. We have to put our efforts where we're going to get the biggest return in preservation terms. That, for me, means identifying the publishers with the largest volume of content currently at risk.
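A toy comparison shows why ranking by proportion and ranking by volume point in different directions. The two members and their figures are invented: the article counts echo the 50 versus 50,000 example above, and the unpreserved counts are pure assumption.

```python
# Hypothetical members: names and unpreserved counts are invented.
members = [
    {"name": "small-press", "works": 50, "unpreserved": 50},
    {"name": "large-house", "works": 50_000, "unpreserved": 5_000},
]


def by_proportion(ms):
    """Rank by the share of each member's output that is unpreserved."""
    return sorted(ms, key=lambda m: m["unpreserved"] / m["works"], reverse=True)


def by_volume(ms):
    """Rank by the absolute number of works at risk."""
    return sorted(ms, key=lambda m: m["unpreserved"], reverse=True)


print([m["name"] for m in by_proportion(members)])
print([m["name"] for m in by_volume(members)])
```

In this made-up case the small press is 100% unpreserved but accounts for 50 works, while the large publisher is only 10% unpreserved yet has 5,000 works at risk, so the two rankings put different members first.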

You can also think about this in “works” terms. The good news is 4.3 million of the works we studied were preserved at least in one place. That's not utterly terrible, and this under-counts preservation, because we haven’t got data from every archive everywhere. I’m also not looking at green archives, although there’s still debate about whether such platforms can constitute adequate preservation. It is true that simple hosting in an institutional repository is not the same as triplicate redundancy preservation in dark archives.

I'm not sure many publishers want to hear this, but actually things like SciHub and LibGen do provide a preservation status. In some ways you can get access to material there that has disappeared from some formal areas. Not that I’m encouraging you to use those services, but there are shadow archives as well. As I have noted before, though, we cannot rely on the long-term stability of such platforms, which face constant legal threats.

However, the bad news is that there are about 2 million articles in this sample where I couldn't find any preservation status at all. This material is immediately at risk, and needs some kind of intervention to protect it.

We also excluded some works because they weren't journals, or they were published in the current year so they're not yet ingested into an archive, and so on. There is a whole series of criteria that I have made available in the final peer-reviewed article on this work and you can look up our methods.

Where do we go from here?

I think the most important next step, now that we have published our findings, is trying to do some direct member outreach. Again, though, as I explained, this is a difficult thing to do. But, for instance, we have a country-level breakdown as well, and some countries are doing this better than others. We need to produce literature in specifically targeted languages, so that people in these cultures can be brought on board with our preservation efforts.

We also have an idea for a project for self-healing DOIs. The idea is that, if a publisher tells us about it, we could work with archives, at the point where the publisher registers a DOI, to put open access content into archives automatically.

We would pilot this with open access content because it solves a heap of headaches around licensing and things that we don't want to get into. But this could be a prototype for a way to make archiving the default thing you do when you get a persistent identifier; underpinning that persistent identifier and making it persistent.

About Martin Eve

Martin Paul Eve is Principal R&D Developer at Crossref. He is also the Professor of Literature, Technology and Publishing at Birkbeck, University of London. Martin was also Visiting Professor of Digital Humanities at Sheffield Hallam University from 2019 until 2022. Previously he was a Senior Lecturer at Birkbeck, a Lecturer in English at the University of Lincoln, UK, and an Associate Tutor/Lecturer at the University of Sussex, where he completed his Ph.D. Martin is the external examiner/validator for MPhil degrees at the University of Cambridge.

Broadly speaking, Martin’s work centres on understanding different registers of knowledge and how they manifest in writing. Martin studies how literary reading techniques can be used to provide us with access to a set of differing epistemologies that all take inscriptive forms: historical, scientistic, digito-factual, and literary knowledges. This work is spread between contemporary American and British fiction, histories and philosophies of technology, evaluative cultures in the academy, and technological mutations in scholarly publishing. He is the author or editor of ten scholarly books.